Experience with Generative AI and Precognition

by Zaq Stavano – July 5, 2023

In the early days of the Predictions Competition, I started generating prediction options using formulas on a spreadsheet filled with random People, Places, and Things. Each week, I would select three items from each category and publish them for the participants to vote on. Over time, I refined the prediction options and the image reveal process to make them more universally understandable in a precognitive sense. During Week 9 of the competition, the target person, Charlie Brown, and the target thing, a Ball, both appeared as circles. Accuracy dropped compared to the previous week: only 22% of participants accurately predicted Charlie Brown (a 17% decrease) and 29% accurately predicted the Ball (a 7% decrease). This highlighted the need for more distinct elements in the predictions to ensure accurate precognition.
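The weekly selection step described above can be sketched in a few lines of Python. This is only an illustration, not the actual spreadsheet formulas: the function name `pick_weekly_options`, the `seed` parameter, and the small example pool (built from targets mentioned in this post) are assumptions made for the sketch.

```python
import random


def pick_weekly_options(pool, per_category=3, seed=None):
    """Pick `per_category` distinct items from each category in `pool`.

    `pool` maps a category name to a list of candidate items.
    `seed` is optional and only makes the draw repeatable.
    """
    rng = random.Random(seed)
    # random.Random.sample draws without replacement, so no item repeats
    # within a category in the same week.
    return {
        category: rng.sample(items, per_category)
        for category, items in pool.items()
    }


# Hypothetical pool for illustration only.
example_pool = {
    "People": ["Archaeologist", "Lawyer", "Electrician", "Audrey Hepburn", "Charlie Brown"],
    "Places": ["Tuscany", "Grand Canyon", "Niagara Falls", "The Colosseum", "Zoo"],
    "Things": ["Toothbrush", "Piano", "Scissors", "Statue", "Pizza", "Ball"],
}

options = pick_weekly_options(example_pool, seed=9)
for category, items in options.items():
    print(category, items)
```

Sampling without replacement keeps the three options in each category distinct, but it does nothing about options that merely look alike, such as Charlie Brown and a Ball; that still needs a manual check.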

In January 2023, I introduced generative AI into the prediction process. At first, I engaged in conversations with ChatGPT, seeking its input and ideas for prediction options. However, I found that this approach didn’t yield the best choices. I then shifted to simpler prompts, asking the AI to list random people, places, and objects. To my surprise, the AI’s suggestions resulted in remarkable outcomes, leading to a significant increase in accurate predictions by the majority of participants.

During the initial two weeks of incorporating AI assistance (Weeks 27 and 28), the competition witnessed a notable surge in overall accuracy. In Week 27, out of the 87 participants, 43% accurately predicted an Archaeologist, marking a 15% improvement from the previous week. Additionally, 39% of the 79 players accurately predicted Tuscany, and 41% of the 73 players accurately predicted Toothbrush.

This positive trend continued into Week 28, where 45% of the 93 participants accurately predicted Lawyer, 40% of the 90 participants accurately predicted Grand Canyon, and 40% of the 79 participants accurately predicted Piano.
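The week-over-week figures in this post can be read in two ways, and the text does not say which one is meant: a change in percentage points, or a change relative to the previous week's accuracy. The sketch below shows both calculations. The Week 27 person accuracy (43% of 87 participants) comes from the post; the count of 37 correct answers and the 28% figure for the prior week are assumptions chosen only to make the arithmetic concrete.

```python
def accuracy_pct(correct, total):
    """Share of participants who predicted the target, as a percentage."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100.0 * correct / total


def point_change(current_pct, previous_pct):
    """Change in percentage points (e.g. 28% -> 43% is +15 points)."""
    return current_pct - previous_pct


def relative_change(current_pct, previous_pct):
    """Change relative to the previous value, as a percentage of it."""
    if previous_pct == 0:
        raise ValueError("previous_pct must be non-zero")
    return 100.0 * (current_pct - previous_pct) / previous_pct


# Hypothetical count: 37 correct out of 87 rounds to the reported 43%.
week_27_person = accuracy_pct(37, 87)

# Assumed prior-week accuracy of 28% (not stated in the post).
print(round(week_27_person))            # rounded accuracy for the week
print(point_change(43, 28))             # change in percentage points
print(round(relative_change(43, 28), 1))  # change relative to the prior week
```

Under these assumptions the same improvement is 15 percentage points but roughly a 54% relative increase, so it helps to state which measure a weekly comparison uses.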

I formed a close collaboration with the AI, discussing the competition and seeking its advice on how to improve the system. Whenever I noticed elements that were not being well precognized, I took notes and shared them with the AI, asking for suggestions on generating better options. This collaborative approach really helped in refining the prediction process.

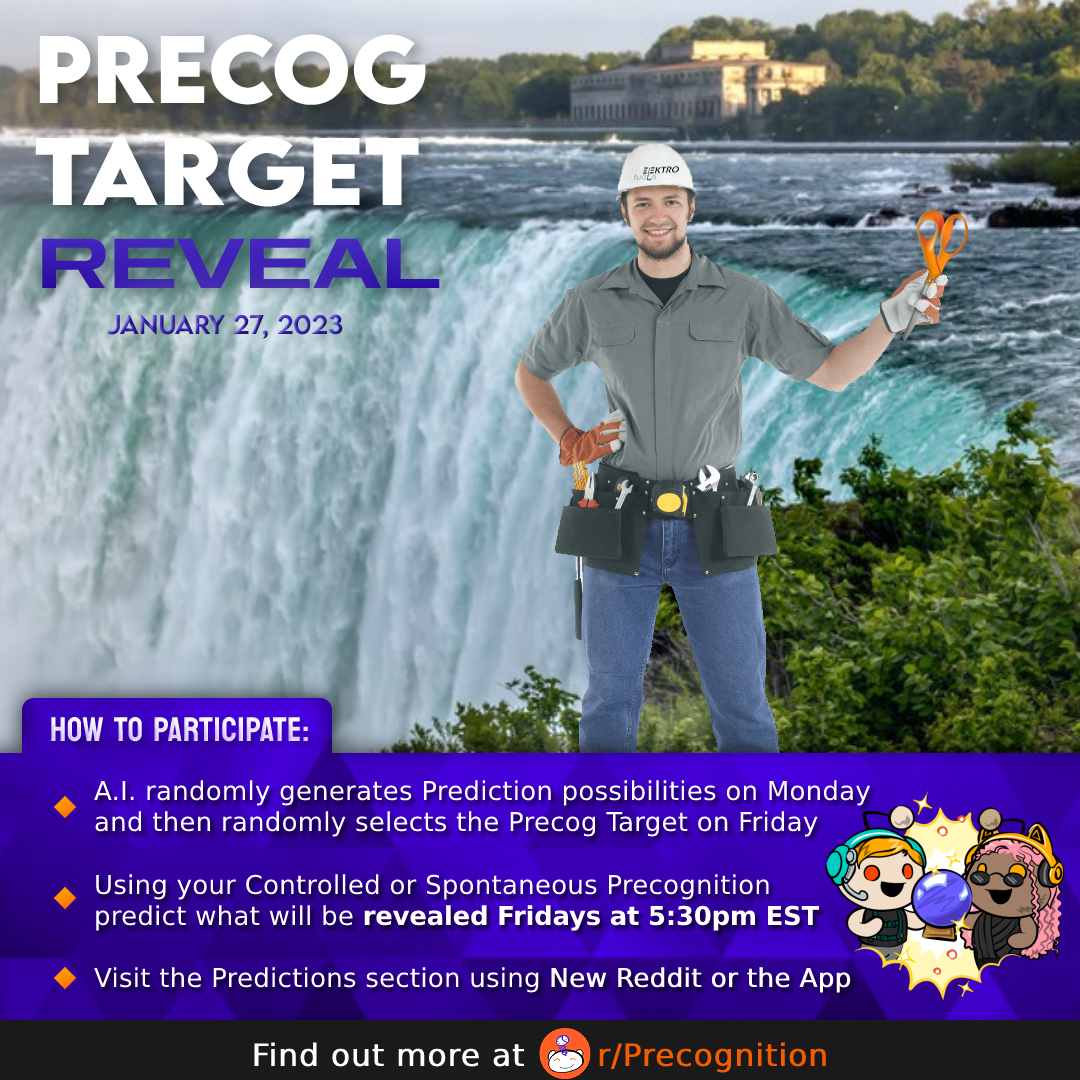

In Week 29 of the competition, there was a noticeable decline in accuracy among participants, marking the first time in a while that most players failed to make accurate predictions. Accuracy for both the person and thing, which were Electrician and Scissors that week, decreased by 15%. Additionally, accuracy for the place, Niagara Falls, decreased by 11%. Seeking guidance, I turned to the AI and implemented some of its suggestions, such as a simplified instructions overlay, a recognizable logo, and a visual guide specifically for the target elements.

The implementation of these ideas led to two weeks of improved accuracy in the competition (Weeks 30 and 31). In Week 30, out of 115 participants, 40% accurately predicted Audrey Hepburn, showcasing a 10% increase from the previous week. Among 105 participants, 33% accurately predicted The Colosseum, which marked a 4% improvement. Additionally, 33% of the 101 participants accurately predicted a Statue, reflecting an 8% increase in accuracy from the previous week. Week 31 also saw positive results, as I observed accurate predictions from 41% of the 91 participants for an Elephant, 41% of the 80 participants for a Zoo, and 35% of the 79 participants for Pizza.

However, it became evident that some of the AI’s suggestions, such as adding mystical text effects and a dreamlike ambiance to the image reveal, hindered participants’ precognition instead of enhancing it. It took me several weeks to realize this mistake and adapt the approach. During Weeks 32-35, accuracy for people and places stayed above average, but overall weekly accuracy growth slowed, which aligned with the additional changes I had made.

To address these concerns, I sought feedback from the community through a survey in Week 33. The feedback proved invaluable, as I discovered that each week should have a different aesthetic, and mystical effects were not conducive to accurate precognition. The subsequent weeks allowed us to fine-tune the adjustments, gradually restoring participant accuracy to normal and above-average levels.

However, in recent months, the reliability of the AI has declined compared to its peak performance. It started generating bizarre options, displayed less randomness, and even contradicted itself. Moreover, its occasional skepticism toward precognition became increasingly evident in conversation. To address this, I revisited my spreadsheets and perfected the randomization formulas, creating a new system that ensures accurate and dependable predictions.

At present, I rely on generative AI only for images, to help with the artistic side of the precog target image reveal. I provide the AI with the target person, place, and thing, and use the first image that clearly depicts all three. While AI-generated art does have its limitations, I’ve found ways to work around them, such as manually fixing certain aspects in Photoshop.

In conclusion, generative AI has provided valuable suggestions and enhanced the accuracy of predictions, but a noticeable decline in its performance means formulas remain supreme. Nonetheless, the competition continues to engage participants and foster the exploration of their precognitive abilities.